|

Vision research has shown remarkable success in understanding our world, propelled by datasets of images and videos.

Sensor data from radar, LiDAR and cameras has supported research in robotics and autonomous driving for at least a decade.

However, while visual sensors may fail in some conditions, sound has recently shown potential to complement sensor data.

Simulated room impulse responses (RIRs) in 3D apartment models have become a benchmark dataset for the community, fostering a range of audio-visual research.

In simulation, depth can be predicted from sound by learning bat-like perception with a neural network.

Concurrently, the same was achieved in reality by using RGB-D images and echoes of chirping sounds.

Biomimicking bat perception is an exciting new direction but needs dedicated datasets to explore its potential.

Therefore, we collected the BatVision dataset to provide large-scale echoes in complex real-world scenes to the community.

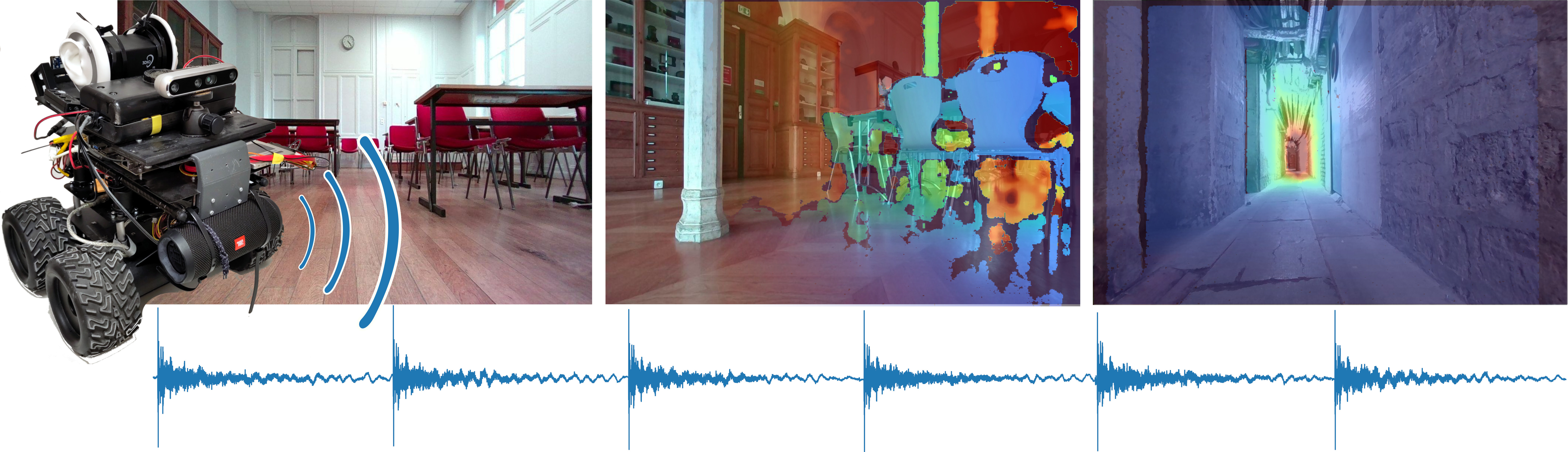

We equipped a robot with a speaker to emit chirps and a binaural microphone to record their echoes.

Synchronized RGB-D images from the same perspective provide visual labels of traversed spaces.

We sampled spaces ranging from modern US offices to historic French university grounds, indoor and outdoor, with large architectural variety.

This dataset will allow research on robot echolocation, general audio-visual tasks, and sound phenomena unavailable in simulated data.

We show promising results for audio-only depth prediction and demonstrate that state-of-the-art work developed for simulated data can also succeed on our dataset.

|