Publications

2025

-

One Wave to Explain Them All: A Unifying Perspective on Post-hoc ExplainabilityIn Forty-second International Conference on Machine Learning, 2025

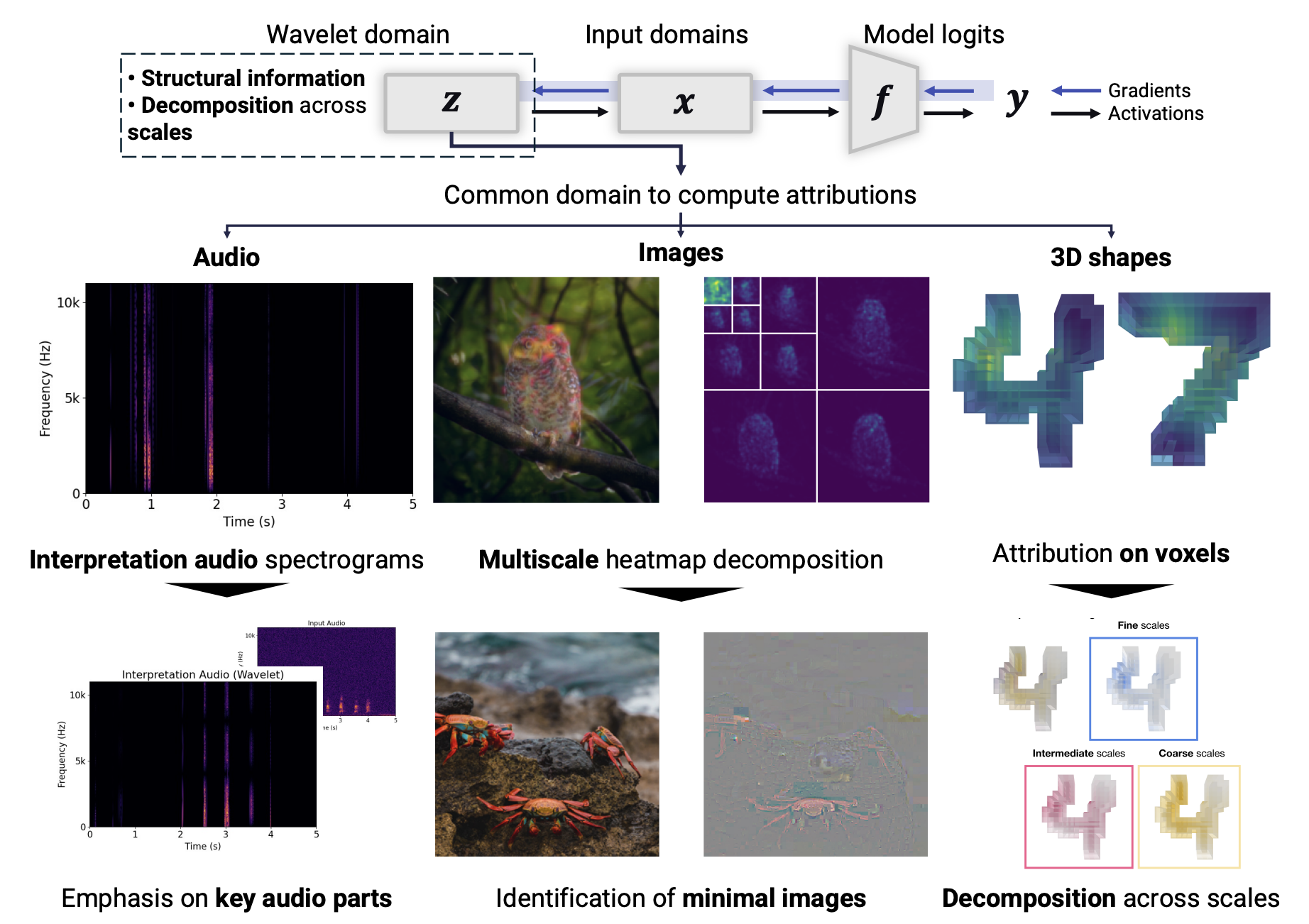

One Wave to Explain Them All: A Unifying Perspective on Post-hoc ExplainabilityIn Forty-second International Conference on Machine Learning, 2025Despite the growing use of deep neural networks in safety-critical decision-making, their inherent black-box nature hinders transparency and interpretability. Explainable AI (XAI) methods have thus emerged to understand a model’s internal workings, and notably attribution methods also called saliency maps. Conventional attribution methods typically identify the locations – the where – of significant regions within an input. However, because they overlook the inherent structure of the input data, these methods often fail to interpret what these regions represent in terms of structural components (e.g., textures in images or transients in sounds). Furthermore, existing methods are usually tailored to a single data modality, limiting their generalizability. In this paper, we propose leveraging the wavelet domain as a robust mathematical foundation for attribution. Our approach, the Wavelet Attribution Method (WAM) extends the existing gradient-based feature attributions into the wavelet domain, providing a unified framework for explaining classifiers across images, audio, and 3D shapes. Empirical evaluations demonstrate that WAM matches or surpasses state-of-the-art methods across faithfulness metrics and models in image, audio, and 3D explainability. Finally, we show how our method explains not only the where – the important parts of the input – but also the what – the relevant patterns in terms of structural components.

@inproceedings{kasmi2024one, title = {One Wave to Explain Them All: A Unifying Perspective on Post-hoc Explainability}, author = {Kasmi, Gabriel and Brunetto, Amandine and Fel, Thomas and Parekh, Jayneel}, booktitle = {Forty-second International Conference on Machine Learning}, year = {2025}, url = {https://openreview.net/forum?id=njZ5oVPObS}, } -

NeRAF: 3D Scene Infused Neural Radiance and Acoustic FieldsAmandine Brunetto, Sascha Hornauer, and Fabien MoutardeIn The Thirteenth International Conference on Learning Representations, 2025

NeRAF: 3D Scene Infused Neural Radiance and Acoustic FieldsAmandine Brunetto, Sascha Hornauer, and Fabien MoutardeIn The Thirteenth International Conference on Learning Representations, 2025Sound plays a major role in human perception. Along with vision, it provides essential information for understanding our surroundings. Despite advances in neural implicit representations, learning acoustics that align with visual scenes remains a challenge. We propose NeRAF, a method that jointly learns acoustic and radiance fields. NeRAF synthesizes both novel views and spatialized room impulse responses (RIR) at new positions by conditioning the acoustic field on 3D scene geometric and appearance priors from the radiance field. The generated RIR can be applied to auralize any audio signal. Each modality can be rendered independently and at spatially distinct positions, offering greater versatility. We demonstrate that NeRAF generates high-quality audio on SoundSpaces and RAF datasets, achieving significant performance improvements over prior methods while being more data-efficient. Additionally, NeRAF enhances novel view synthesis of complex scenes trained with sparse data through cross-modal learning. NeRAF is designed as a Nerfstudio module, providing convenient access to realistic audio-visual generation.

@inproceedings{brunetto2025neraf, title = {Ne{RAF}: 3D Scene Infused Neural Radiance and Acoustic Fields}, author = {Brunetto, Amandine and Hornauer, Sascha and Moutarde, Fabien}, booktitle = {The Thirteenth International Conference on Learning Representations}, year = {2025}, url = {https://openreview.net/forum?id=njvSBvtiwp}, }

2024

-

PANO-ECHO: PANOramic depth prediction enhancement with ECHO featuresXiaohu Liu, Amandine Brunetto, Sascha Hornauer, Fabien Moutarde, and Jialiang LuIn IEEE Conference on Artificial Intelligence (CAI), 2024

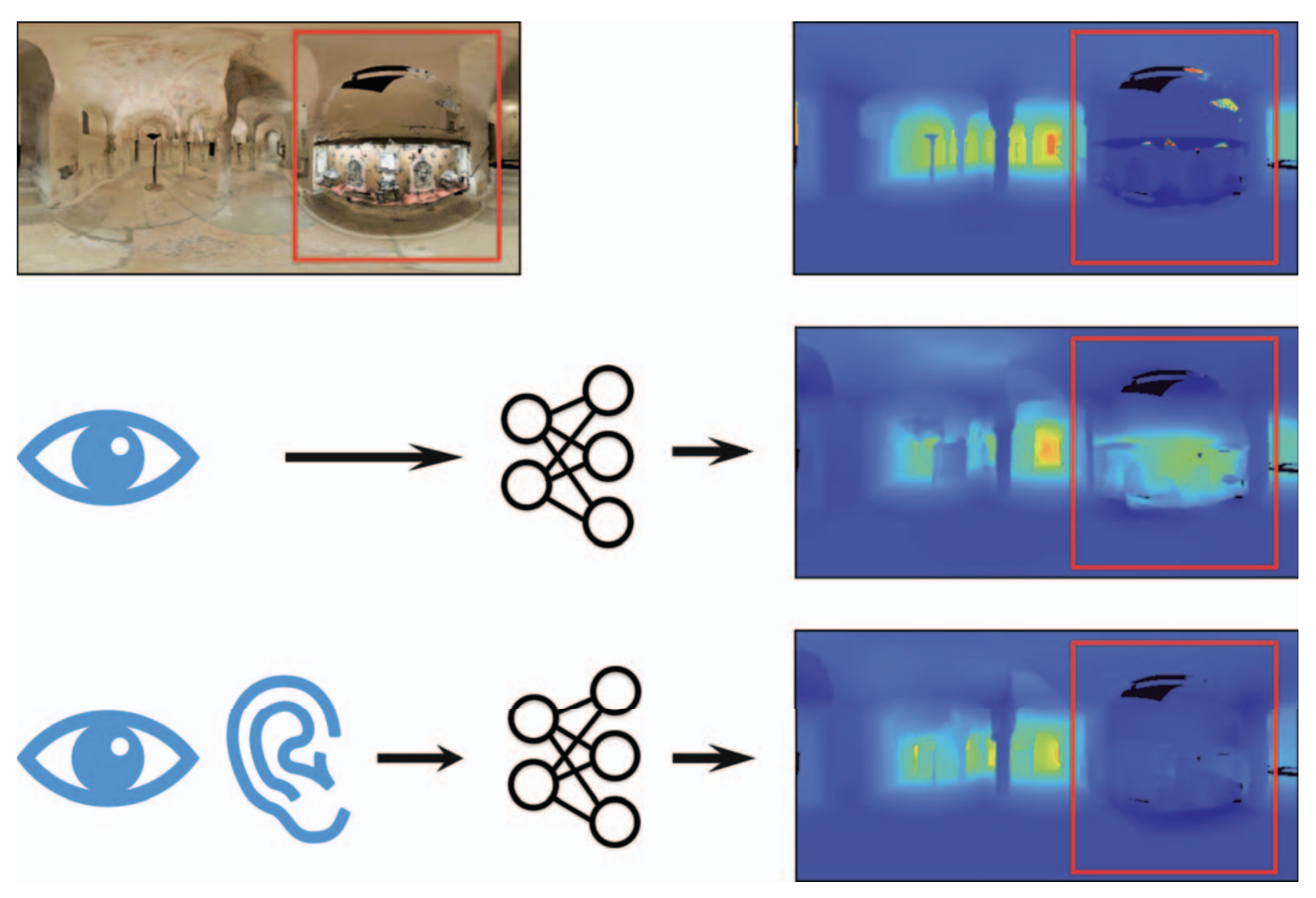

PANO-ECHO: PANOramic depth prediction enhancement with ECHO featuresXiaohu Liu, Amandine Brunetto, Sascha Hornauer, Fabien Moutarde, and Jialiang LuIn IEEE Conference on Artificial Intelligence (CAI), 2024Panoramic depth estimation gains importance with more 360° images being widely available. However, traditional mono-to-depth approaches, optimized for a limited field of view, show subpar performance when naively adapted. Methods tailored to process panoramic input improve predictions but can not overcome ambiguous visual information and scale-uncertainty inherent to the task. In this paper we show the benefits of leveraging sound for improved panoramic depth estimation. Specifically, we harness audible echoes from emitted chirps as they contain rich geometric and material cues about the surrounding environment. We show that these auditory cues can enhance a state-of-the-art panoramic depth prediction framework. By integrating sound information, we improve this vision-only baseline by ≈ 12%. Our approach requires minimal modifications to the underlying architecture, making it easily applicable to other baseline models. We validate its efficacy on the Matterport3D and Replica datasets, demonstrating remarkable improvements in depth estimation accuracy

@inproceedings{liu2024pano, title = {PANO-ECHO: PANOramic depth prediction enhancement with ECHO features}, author = {Liu, Xiaohu and Brunetto, Amandine and Hornauer, Sascha and Moutarde, Fabien and Lu, Jialiang}, booktitle = {IEEE Conference on Artificial Intelligence (CAI)}, pages = {1063--1070}, year = {2024}, doi = {10.1109/CAI59869.2024.00193}, organization = {IEEE}, }

2023

-

The Audio-Visual BatVision Dataset for Research on Sight and SoundIn IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2023

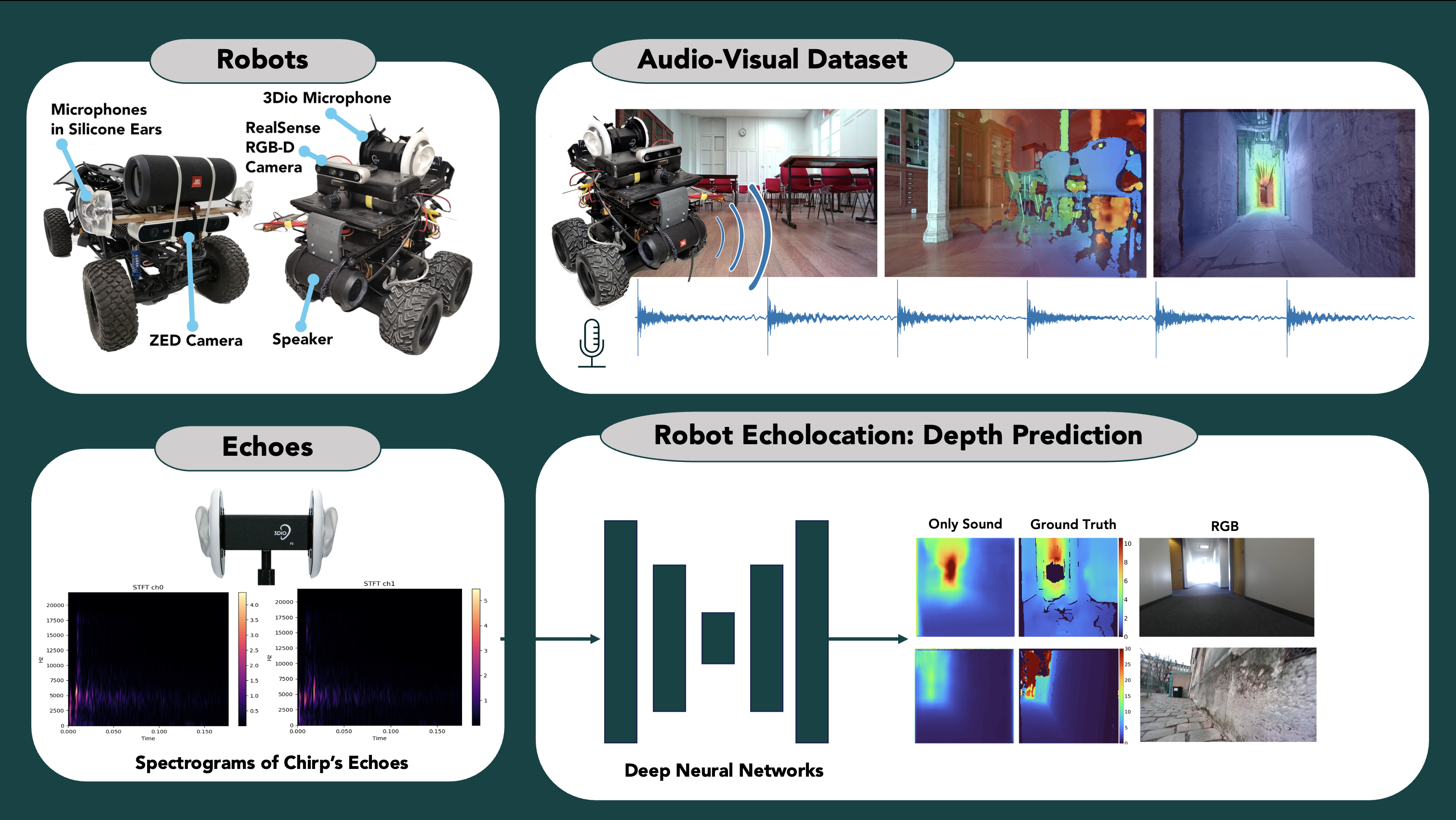

The Audio-Visual BatVision Dataset for Research on Sight and SoundIn IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2023Vision research showed remarkable success in understanding our world, propelled by datasets of images and videos. Sensor data from radar, LiDAR and cameras supports research in robotics and autonomous driving for at least a decade. However, while visual sensors may fail in some conditions, sound has recently shown potential to complement sensor data. Simulated room impulse responses (RIR) in 3D apartment-models became a benchmark dataset for the community, fostering a range of audiovisual research. In simulation, depth is predictable from sound, by learning bat-like perception with a neural network. Concurrently, the same was achieved in reality by using RGB-D images and echoes of chirping sounds. Biomimicking bat perception is an exciting new direction but needs dedicated datasets to explore the potential. Therefore, we collected the BatVision dataset to provide large-scale echoes in complex real-world scenes to the community. We equipped a robot with a speaker to emit chirps and a binaural microphone to record their echoes. Synchronized RGB-D images from the same perspective provide visual labels of traversed spaces. We sampled modern US office spaces to historic French university grounds, indoor and outdoor with large architectural variety. This dataset will allow research on robot echolocation, general audio-visual tasks and sound phænomena unavailable in simulated data. We show promising results for audio-only depth prediction and show how state-of-the-art work developed for simulated data can also succeed on our dataset.

@inproceedings{brunetto2023batvision, author = {Brunetto, Amandine and Hornauer, Sascha and Yu, Stella X. and Moutarde, Fabien}, booktitle = {IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS)}, title = {The Audio-Visual BatVision Dataset for Research on Sight and Sound}, year = {2023}, volume = {}, number = {}, pages = {1-8}, doi = {10.1109/IROS55552.2023.10341715}, }